OpenAI has announced that its new 'GPT-4 Turbo' model is now available to paid ChatGPT users.

"Our new GPT-4 Turbo is now available to paid ChatGPT users. We've improved capabilities in writing, math, logical reasoning, and coding."

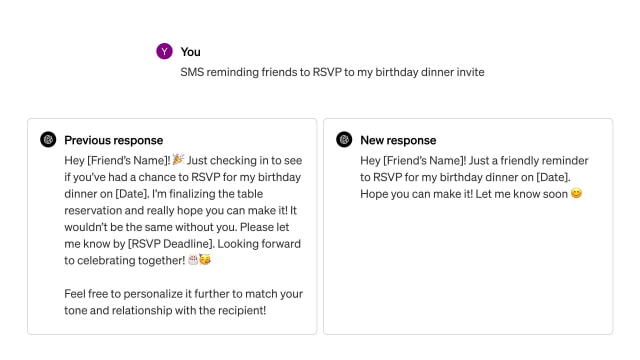

The company says that when writing with ChatGPT, responses will now be more direct, less verbose, and more conversational. The model's training data is also more current and includes information up to December 2023.

"We continue to invest in making our models better and look forward to seeing what you do. If you haven't tried it yet, GPT-4 Turbo is available in ChatGPT Plus, Team, Enterprise, and the API."

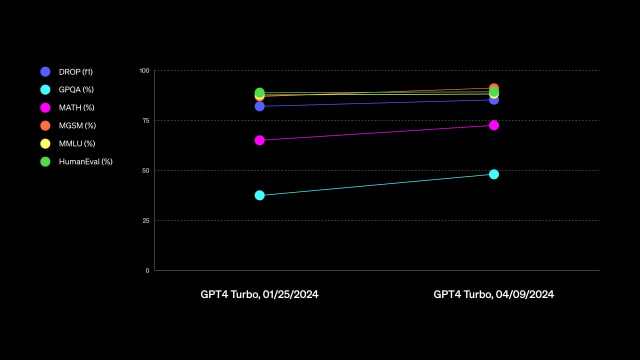

OpenAI has also created a new lightweight library for evaluating language models and shared results comparing GPT-4 and GPT-4 Turbo on the following evals:

● MMLU: Measuring Massive Multitask Language Understanding

● MATH: Measuring Mathematical Problem Solving With the MATH Dataset

● GPQA: A Graduate-Level Google-Proof Q&A Benchmark

● DROP: A Reading Comprehension Benchmark Requiring Discrete Reasoning Over Paragraphs

● MGSM: Multilingual Grade School Math Benchmark, from "Language Models are Multilingual Chain-of-Thought Reasoners"

● HumanEval: Evaluating Large Language Models Trained on Code

● MMMU: A Massive Multi-discipline Multimodal Understanding and Reasoning Benchmark for Expert AGI

You can try out ChatGPT at the link below...

Read More

Our new GPT-4 Turbo is now available to paid ChatGPT users. We've improved capabilities in writing, math, logical reasoning, and coding.

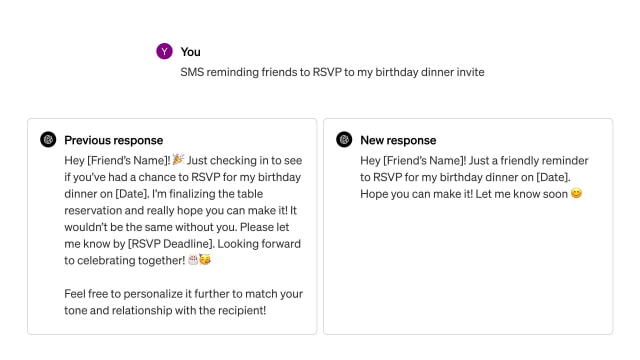

The company says that when writing with ChatGPT, responses will now be more direct, less verbose, and use more conversational language. Additionally, the model has a more current data set that includes information up to December 2023.

"We continue to invest in making our models better and look forward to seeing what you do. If you haven't tried it yet, GPT-4 Turbo is available in ChatGPT Plus, Team, Enterprise, and the API."

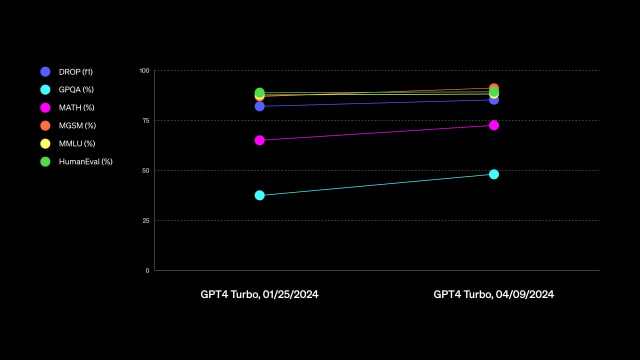

OpenAI has also created a new lightweight library for evaluating language models and shared the results of GPT-4 vs GPT-4 Turbo in the following evals...

● MMLU: Measuring Massive Multitask Language Understanding

● MATH: Measuring Mathematical Problem Solving With the MATH Dataset

● GPQA: A Graduate-Level Google-Proof Q&A Benchmark

● DROP: A Reading Comprehension Benchmark Requiring Discrete Reasoning Over Paragraphs

● MGSM: Multilingual Grade School Math Benchmark (MGSM), Language Models are Multilingual Chain-of-Thought Reasoners

● HumanEval: Evaluating Large Language Models Trained on Code

● MMMU: A Massive Multi-discipline Multimodal Understanding and Reasoning Benchmark for Expert AGI

You can try out ChatGPT at the link below...

Read More